Automated Vigor Estimation on Vineyards

Automated Estimation of Vigor in Vineyards

ACI Avances en Ciencias e Ingenierías

Universidad San Francisco de Quito, Ecuador

Received: 23 June 2021

Accepted: 12 November 2021

Abstract: The balance, or vigor, of a vine, expressed as the ratio of yield to pruning weight, is a useful parameter that growers use to better prepare for the harvest season and to establish precision agriculture management of the vineyard, enabling site-specific planning of tasks such as pruning, debriefing, or budding. Traditionally, growers obtain this parameter by first manually weighing the pruned canes during the vineyard's dormant season (no leaves), then weighing the fruit of the same vines during harvest, and finally correlating the two measurements. Since this is a very manual and time-consuming task, growers usually obtain this number by taking just a couple of samples and extrapolating the value to the entire vineyard. This loses all the variability present in their fields, and with it information that could enable site-specific management and, consequently, improvements in grape quality and quantity. In this paper we develop a computer vision-based algorithm that is robust to differences in trellis system, variety, and light conditions, to automatically estimate the pruning weight and, consequently, the variability of vigor within the lot. The results will be used to improve the way local growers plan the annual winter pruning, advancing the transition to precision agriculture. Our proposed solution does not require weighing the shoots (also called canes) and automatically creates prescription maps (detailed instructions for pruning, harvest, and other location-specific management decisions) based on the estimated vigor. Our solution uses Deep Learning (DL) techniques to segment the vines directly from images captured in the field during the dormant season. Results show that the interpolation maps produced by our method are essentially equivalent to those obtained from the validation set of manually measured pruning weights.

Summary: Estimating vine vigor (grape harvest weight / pruning weight) is a useful parameter that growers use to better prepare for the harvest and to establish a precision agriculture plan, achieving better planning of the growing area, for example for pruning or fertilization. Traditionally, growers obtain this parameter by first manually weighing the pruned canes during the vineyard's dormant season (no leaves); second, during the harvest, recording the weight of the fruit from the vines evaluated in the first step; and then correlating the two measurements. Since this is a very manual and time-consuming task, growers usually obtain this number by taking only a couple of samples and extrapolating the value to the whole vineyard, losing all the variability present in their fields, which implies a loss of information that can lead to lower grape quality and quantity. In this article we develop a computer vision-based algorithm that is robust to differences in trellis system, variety, and ambient light conditions, to automatically estimate the pruning weight and, consequently, the variability of vigor within the lot. The results will be used to improve the way growers plan the annual winter pruning, advancing the transition to precision agriculture. Our solution proposes to automatically create prescription maps (detailed instructions for pruning, harvest, and other location-specific management decisions) based on the vigor obtained by processing photographs of the vine. Our solution uses Deep Learning (DL) techniques to obtain the segmentation of the vines directly from the image captured in the field during the dormant season.
The results show that our method produces interpolation maps essentially equivalent to those from the validation set obtained by manually weighing the prunings.

Keywords: Artificial Intelligence, Image Segmentation, Agricultural Automation.

INTRODUCTION

Recent advances in agricultural management, including the automated processing of field data, have dramatically improved agriculture around the world. These advances are partially due to the ability to adapt to local factors that influence crop yield, such as climate, growing region, and soil type. As a result, growers use a wide range of plant densities and training/trellis systems to optimize harvest practices. In viticulture, a primary consideration when selecting the proper trellis system is vine vigor: highly vigorous vines require larger trellis systems, more space, or a devigorating rootstock compared with low-vigor vines. Traditionally, vigor, also known as the vine balance value, is estimated using the Ravaz Index [17], which growers and researchers obtain by manually weighing the prunings during the dormant season and relating this weight to the harvest weight (around six months after pruning). Since pruning happens at specific times (in California, for example, in February), growers can only obtain a first estimate of these values after the pruning months. Another problem with this method is that it requires expensive manual labor: to be effective, many samples need to be taken in different areas of the vineyard to capture the variation that naturally occurs in every vineyard, so that management techniques can adapt to it.
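As a point of reference, the Ravaz Index is a simple ratio: the fruit yield of a vine divided by the pruning weight of the same vine. The minimal Python sketch below computes it. The function names are hypothetical, and the interpretation bands in `balance_class` (below about 5 suggesting excess vigor, above about 10 suggesting overcropping) are an assumed, commonly quoted rule of thumb rather than values taken from this paper.

```python
def ravaz_index(yield_kg: float, pruning_weight_kg: float) -> float:
    """Ravaz Index: fruit yield divided by the dormant pruning weight of the same vine."""
    if pruning_weight_kg <= 0:
        raise ValueError("pruning weight must be positive")
    return yield_kg / pruning_weight_kg


def balance_class(index: float, low: float = 5.0, high: float = 10.0) -> str:
    """Coarse vine-balance label.

    ASSUMPTION: the 5-10 'balanced' band is a rule of thumb, not from the paper.
    """
    if index < low:
        return "vigorous"      # too much vegetative growth for the crop carried
    if index > high:
        return "overcropped"   # too much crop for the leaf area / wood produced
    return "balanced"
```

For example, a hypothetical vine yielding 8 kg of fruit with 1 kg of prunings has an index of 8.0 and falls in the assumed balanced band.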

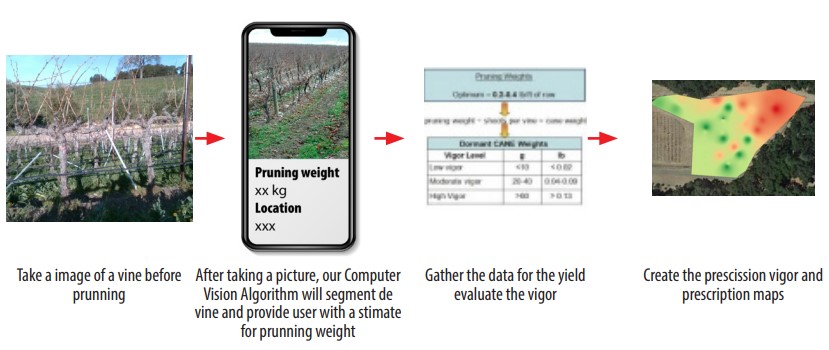

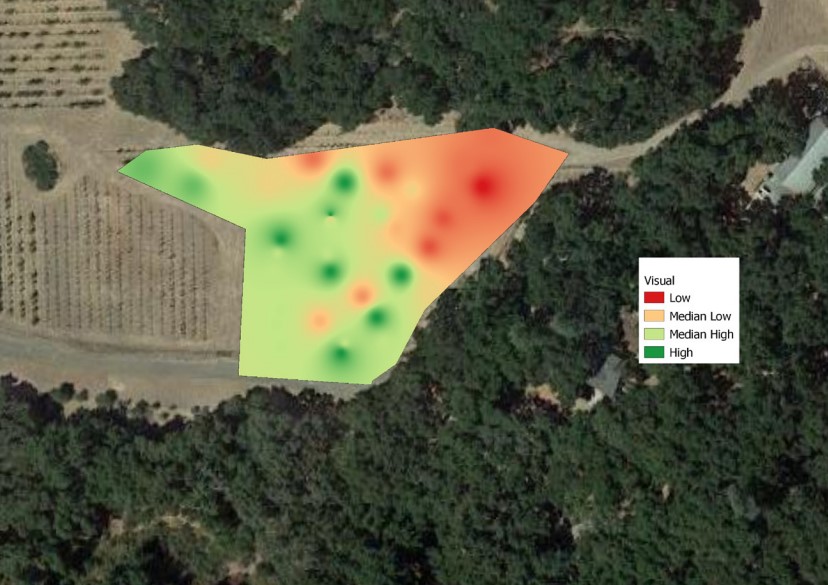

Fig. 1.

On-Site Vigor Estimation and Vigor Maps

In this paper, rather than using labor-intensive manual methods to estimate vigor, we use algorithms based on computer vision. In our solution, we take images with a regular smartphone camera and then perform image segmentation to estimate the weight of the canes in situ. Our method has several advantages over the methods currently in use. First, it does not destroy or affect the plant in any way; second, results are available immediately after taking the picture. Since almost everybody has a smartphone with a camera, growers will be able to take a picture of a vine and get immediate feedback on its expected vigor without the need for expensive equipment. This allows us to create vigor maps like the ones shown in Figure 1, which growers can use to adapt management to the local conditions of their vineyards and to improve not only production but also the quality of the harvest.

Previous Work

One of the main challenges being studied by the scientific community in viticulture is early yield prediction; to obtain this value directly from images, we need an accurate segmentation of the vine. Tree segmentation is particularly difficult because trees usually contain a lot of texture, the background pixel colors are similar to the foreground, and trees usually grow in close groups, which makes it difficult to tell where one tree ends. Several papers study tree segmentation. [18] presents a solution for tree segmentation in a complex scene. The proposed algorithm consists mainly of a preliminary image segmentation, a trunk structure extraction, and a leaf region identification process; the trunk structure extraction is modeled as an optimization problem in which an energy function is formulated according to the color, position, and orientation of the segmented regions. In [12], a trunk and branch segmentation method was developed using a Kinect V2 sensor and deep learning-based semantic segmentation. The Kinect was used to acquire point cloud data of the tree canopies in a commercial apple orchard, and depth and RGB information extracted from the point cloud data were used to remove the background trees from the RGB image. The trunk and branches, which share a common appearance and features, were then segmented using a convolutional neural network (SegNet) with an accuracy of 0.92. [8] proposes a binocular stereo vision localization method to obtain three-dimensional information about apple branch obstacles. [4] presents a new crown-trunk segmentation method for tree images in order to automatically extract their most significant feature, the ratio between crown and trunk diameters.
[9] proposes a method for pruning mass estimation using computer vision in which the images are taken with a dedicated stereo vision camera to create the depth map; however, their algorithm lacks robustness to different light conditions.

There are also several research areas in agriculture that use computer vision techniques to boost the productivity of different crops [1]-[15]. Specific to wine grapes, [1] develops an application to evaluate canopy gaps in vines using computer vision feature extraction algorithms. Canopy porosity is an important viticultural factor because canopy gaps favor fruit exposure and air circulation, both of which benefit fruit quality and health. The algorithm used in that work is based on feature extraction, which is prone to errors when lighting, fruit variety, and other conditions change. [2] also uses computer vision techniques, in this case to assess the number of flowers per inflorescence: the authors develop an application that lets growers take pictures of a vine inflorescence and outputs the number of flowers. The works in [1]-[15] use feature extraction algorithms; in general, these algorithms are less robust and produce inconsistent results under small variations in outdoor conditions such as time of day and weather. Deep learning and neural networks for computer vision have also been applied to vines, but mainly to classify different vine species, as in [3]-[15].

There are several papers discussing Deep Learning (DL) implementations of semantic segmentation [11], in which every pixel in the image receives a category label without differentiating between distinct objects of the same category, and of instance segmentation [6], in which each object in the image is segmented separately. Our specific problem requires instance segmentation.

In our proposed research we do not use expensive equipment; our experience with farmers, especially smaller ones, is that they do not buy expensive or complicated software. We talked to several local farms to validate our research in real commercial locations, and they were very enthusiastic about being able to use their phones to create prescription maps.

Fig. 2.

Original Image Taken in the Vineyard

Paper Structure

The remainder of this paper is organized as follows. Section 2 gives an overview of the methodology we propose for cane segmentation, Section 3 summarizes the results obtained, and Section 4 presents the conclusions and future work.

METHODOLOGY

In this section we explain how to obtain a robust segmentation of the vine. As can be seen in Figure 2, when we capture images with the phone, the background contains pixels with colors very similar to those of the vine we are trying to segment, which makes background subtraction difficult. Initially, to make sure we had reliable results and could perform validation, we took two pictures of each vine in the vineyards: one with an artificial white background (Figure 3) and a second without it. We also manually obtained the pruning weight of each photographed vine.

Fig. 3.

Vine Segmentation with Artificial White Background: a) No background, b) With background

These pictures with an artificial background are clearly easier to segment, since there is a strong contrast between the vine and the background. Nevertheless, because the pictures are taken outdoors under variable light conditions, simple color segmentation was not enough: we first needed to remove shadows, poles, and other artifacts that produce inaccurate results. After a histogram-based color correction, we applied the watershed segmentation algorithm [10]. Results are shown in Figure 4 and described in more detail in Section 3.
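The pipeline above (color correction followed by watershed) can be sketched in pure Python on a small grayscale image stored as a list of lists. This is an illustration under stated assumptions, not the authors' code: a linear contrast stretch stands in for the unspecified histogram color correction, the marker thresholds `dark_thr` and `bright_thr` are hypothetical, and the watershed is a textbook marker-based (Meyer-style) flooding of a gradient-magnitude image. In practice one would more likely call OpenCV's `cv2.watershed` or scikit-image's `skimage.segmentation.watershed`.

```python
import heapq


def stretch_contrast(gray):
    """Linearly stretch grey levels to 0-255 (stand-in for histogram correction)."""
    flat = [v for row in gray for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [row[:] for row in gray]
    return [[round(255 * (v - lo) / (hi - lo)) for v in row] for row in gray]


def gradient_magnitude(img):
    """|dx| + |dy| with central differences, clamped at the image borders."""
    h, w = len(img), len(img[0])
    return [[abs(img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)])
             + abs(img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x])
             for x in range(w)] for y in range(h)]


def marker_watershed(surface, markers):
    """Flood labels outward from seed pixels, lowest surface value first.

    markers: 0 = unlabelled, >0 = seed label. Returns a fully labelled grid.
    """
    h, w = len(surface), len(surface[0])
    labels = [row[:] for row in markers]
    heap, order = [], 0  # 'order' breaks ties deterministically
    for y in range(h):
        for x in range(w):
            if labels[y][x]:
                heapq.heappush(heap, (surface[y][x], order, y, x))
                order += 1
    while heap:
        _, _, y, x = heapq.heappop(heap)
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= ny < h and 0 <= nx < w and not labels[ny][nx]:
                labels[ny][nx] = labels[y][x]  # inherit the label of the flooding pixel
                heapq.heappush(heap, (surface[ny][nx], order, ny, nx))
                order += 1
    return labels


def segment_canes(gray, dark_thr=60, bright_thr=200):
    """Binary cane mask (1 = cane) for a vine photographed against a white board."""
    img = stretch_contrast(gray)
    # Sure-cane seeds are dark (label 2), sure-background seeds are bright (label 1);
    # pixels in between are assigned by flooding the gradient image.
    markers = [[2 if v <= dark_thr else 1 if v >= bright_thr else 0 for v in row]
               for row in img]
    labels = marker_watershed(gradient_magnitude(img), markers)
    return [[1 if lab == 2 else 0 for lab in row] for row in labels]
```

Flooding the gradient rather than the raw intensities lets the boundary between the cane and background labels settle on edges, which is the usual reason for applying watershed to a gradient image.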

The accuracy of this segmentation, compared with the manual weights, is high, but the approach has an obvious problem: it requires an artificial background, which is inconvenient at the very least because two people are needed to take the pictures. The results are good, but we also want to make creating the maps as easy as possible for the grower, so in the next section we explore how to achieve the same result without the artificial background.
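The paper does not state how the segmented cane pixels are converted into a pruning weight or how accuracy against the manual weights is measured. One plausible approach, shown here purely as an assumption, is to calibrate a linear model, weight = slope × (cane pixels) + intercept, by ordinary least squares against the manually weighed vines, and to use the Pearson correlation as the agreement measure. The function names are hypothetical and the numbers in the example are synthetic.

```python
from math import sqrt


def _centred_sums(xs, ys):
    """Return (sxx, syy, sxy): sums of squared and cross deviations from the means."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired samples")
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxx, syy, sxy


def fit_weight_model(cane_pixels, weights_kg):
    """Least-squares line: weight = slope * pixels + intercept (ASSUMED model)."""
    sxx, _, sxy = _centred_sums(cane_pixels, weights_kg)
    if sxx == 0:
        raise ValueError("pixel counts must not all be equal")
    slope = sxy / sxx
    intercept = (sum(weights_kg) / len(weights_kg)
                 - slope * sum(cane_pixels) / len(cane_pixels))
    return slope, intercept


def pearson_r(xs, ys):
    """Pearson correlation between paired samples (e.g. estimated vs. manual weight)."""
    sxx, syy, sxy = _centred_sums(xs, ys)
    return sxy / sqrt(sxx * syy)
```

With synthetic pixel counts `[1000, 2000, 3000, 4000]` and weights `[0.6, 1.1, 1.6, 2.1]` kg, the fit gives a slope of 0.0005 kg per pixel, an intercept of 0.1 kg, and a correlation of 1.0. Because the pixel-to-weight factor depends on camera distance, a fixed shooting distance (as the procedures below require) is what would make a single calibration reusable.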

Segmentation without artificial background

Deep learning solutions for image segmentation have been extensively studied in recent years, for applications ranging from skin cancer detection to autonomous cars, and several well-known segmentation models are open source and free to use. We tested Mask R-CNN [6], using the implementation in [5] with some modifications (since we know the vine should be in the center of the image and there should be only one segmented vine per image). However, the resulting segmentation masks significantly overestimated the cane weights and were too rough to be of any use.
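The modification mentioned above (one vine per image, located near the center) can be expressed as a post-processing rule on the candidate masks returned by an instance-segmentation model. The sketch below is a hypothetical illustration of that rule, not the authors' code and not part of Detectron's API: it keeps the single non-empty binary mask whose centroid is closest to the image center.

```python
from math import hypot


def centroid(mask):
    """(row, col) centroid of a binary mask given as a list of lists; None if empty."""
    pts = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    return (sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts))


def pick_central_instance(masks, height, width):
    """Index of the mask whose centroid is nearest the image centre (None if all empty)."""
    cy, cx = (height - 1) / 2, (width - 1) / 2
    best, best_dist = None, float("inf")
    for i, mask in enumerate(masks):
        c = centroid(mask)
        if c is None:
            continue  # the model returned an empty mask; ignore it
        d = hypot(c[0] - cy, c[1] - cx)
        if d < best_dist:
            best, best_dist = i, d
    return best
```

A rule like this only chooses among candidates. It cannot fix masks that are too coarse, which matches the observation that the Mask R-CNN output was too rough regardless of which instance was kept.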

Fig. 4.

Vine Segmentation with Artificial White Background: a) Original, b) Segmentation

Fig. 5.

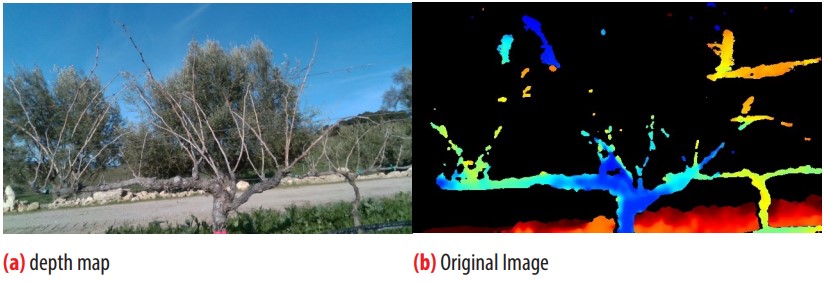

a) Original, b) Depth map calculated with disparity or depth sensor, c) Trimap

The problem is that tree branches are difficult to segment because the background pixel colors are very similar to the foreground. We need a more accurate segmentation, similar to those used for image matting, in particular the solution proposed in [19]. In [19], the deep model has two parts. The first part is a deep convolutional encoder-decoder network that takes an image and the corresponding trimap (an image with just three values: background, foreground, and border) as inputs and predicts the alpha matte of the image (Figure 5). The second part is a small convolutional network that refines the alpha matte predicted by the first network to obtain more accurate alpha values and sharper edges.
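A trimap is usually stored as a single-channel image with three values; here 0 = background, 128 = unknown (border), and 255 = foreground, a common convention assumed for illustration. The matting network predicts an alpha value in [0, 1] per pixel. The hypothetical helpers below show a typical post-processing step, assumed rather than taken from [19]: alpha is forced to 0 or 1 where the trimap is certain, kept (clipped) as predicted in the unknown band, and then thresholded into a binary cane mask.

```python
BACKGROUND, UNKNOWN, FOREGROUND = 0, 128, 255  # ASSUMED trimap encoding


def constrain_alpha(alpha, trimap):
    """Trust the trimap where it is certain; keep the network's alpha elsewhere."""
    out = []
    for a_row, t_row in zip(alpha, trimap):
        row = []
        for a, t in zip(a_row, t_row):
            if t == BACKGROUND:
                row.append(0.0)
            elif t == FOREGROUND:
                row.append(1.0)
            else:
                row.append(min(max(a, 0.0), 1.0))  # clip network output to [0, 1]
        out.append(row)
    return out


def alpha_to_mask(alpha, threshold=0.5):
    """Binary cane mask from an alpha matte (the 0.5 threshold is an assumption)."""
    return [[1 if a >= threshold else 0 for a in row] for row in alpha]
```

Summing the resulting mask (or the alpha values themselves, which credits partially covered pixels along thin canes) gives a cane-area measure that could feed a pixel-to-weight calibration.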

To use this solution for segmentation, we need to obtain trimaps of the vines. Since we are limited to using smartphones to obtain the images, we create these trimaps using the depth-sensing capabilities of most smartphone cameras, as described next.

Depth Maps

Most smartphones have at least two rear cameras. These cameras enable software solutions that create depth maps (images that contain information about the distance from a given viewpoint to the surfaces of objects), which are used to intelligently blur the background and create professional portrait effects. In this paper, we use these smartphone depth maps to create trimap images. A trimap image contains three regions: known background, known foreground, and an unknown region. Once we have the trimaps of the vines, we train a model that takes the original image and the trimap and accurately segments the vines.

There are two main ways phones can get depth information.

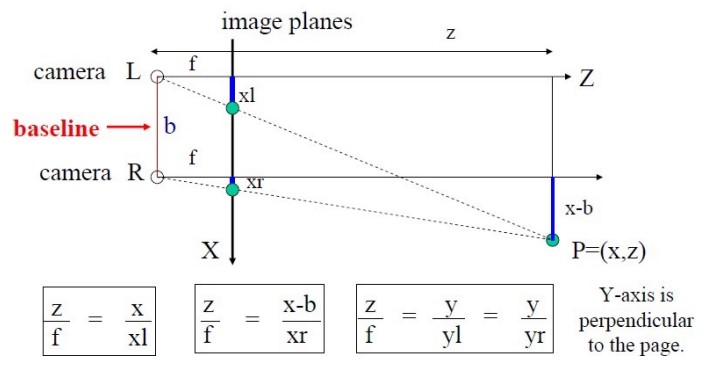

- 1. Disparity. Human depth perception arises from the "disparity" of a given 3D point between the left and right retinas. Disparity is the difference in image location of the same 3D point when it is projected under perspective onto two different cameras (Figure 6).

- 2. Depth Sensor. Most phones also provide IR sensors to calculate depth; their depth data are much more detailed, particularly at close range. Depth estimation works by having an IR emitter project 30,000 dots arranged in a regular pattern. The dots are invisible to people but not to the IR camera, which reads the pattern as it is deformed by reflection off surfaces at various depths. This is the same type of system used by the original version of Microsoft's Kinect, which was widely praised for its accuracy at the time. Depth maps from the depth sensor are more accurate than disparity maps, but the sensor usually forces the user to use the front-facing camera and/or to be close to the vine (within half a meter), which means we cannot capture the entire plant in one picture. Some of the latest smartphone models (for example, the Samsung Galaxy S20+) are improving these sensors and obtaining increasingly accurate results at longer distances; unfortunately, with the phones currently on the market, we cannot use these sensors for this project. We expect these sensors to become standard on smartphones in the future, which will make our proposed segmentation even more accurate and simpler to obtain.
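For a rectified stereo pair, the depth Z of a point follows from its disparity d (in pixels), the focal length f (in pixels), and the baseline B between the two cameras (Figure 6): Z = f·B/d. Differentiating gives the depth change caused by a disparity error, |ΔZ| ≈ Z²/(f·B)·|Δd|. The sketch below applies these textbook relations; the focal length and baseline in the example are hypothetical, not measured phone parameters.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return float("inf")  # zero disparity: point effectively at infinity
    return focal_px * baseline_m / disparity_px


def depth_step(depth_m, focal_px, baseline_m, disparity_step_px=1.0):
    """Approximate depth error from a disparity error: dZ ~ Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px
```

With a hypothetical 12 mm baseline and a 1000-pixel focal length, a one-pixel disparity error at 1.5 m corresponds to roughly 0.19 m of depth. Because the error grows with the square of the distance and shrinks with a longer baseline, this may help explain why thin canes are hard to separate from the background using the short baselines of phone cameras.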

Proposed Algorithm for Segmentation without Artificial Background

If the smartphone has dual cameras or depth sensors that allow the creation of a depth map:

- Use the distance-measuring toolbox to make sure the phone camera is 1.5 m from the vine.
- Capture the image in portrait mode (this way the depth information is saved together with the RGB image).
- Separate the depth information from the captured image.
- Create a trimap with a simple segmentation of the depth image: threshold the image so that anything at a distance greater than 1.5 m is considered background; then apply a simple Canny edge detector, classifying pixels on the edges as the intermediate (unknown) region and the remaining pixels as part of the vine.
- Use alpha matting to refine the segmentation of the canes.
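The trimap step above can be sketched as follows, assuming the depth image is a 2-D grid of distances in meters. Pixels beyond the 1.5 m cut-off become background and the rest foreground. In place of the Canny edge detector named in the text, this sketch uses a simpler rule: a pixel is marked unknown if any pixel within `band` steps lies on the other side of the threshold. It therefore approximates the described step rather than reproducing it. The function name, `band` parameter, and 0/128/255 encoding are hypothetical. The same band construction could also turn the rough mask of the procedure below into a trimap.

```python
BACKGROUND, UNKNOWN, FOREGROUND = 0, 128, 255  # ASSUMED trimap encoding


def trimap_from_depth(depth, max_dist=1.5, band=1):
    """Trimap from a depth map in metres (hypothetical helper).

    Pixels farther than max_dist are background; pixels within `band` of the
    foreground/background boundary are marked unknown (a simple substitute
    for the Canny edge step described in the text).
    """
    h, w = len(depth), len(depth[0])
    fg = [[d <= max_dist for d in row] for row in depth]
    trimap = []
    for y in range(h):
        row = []
        for x in range(w):
            # (2*band+1)^2 window around the pixel, clipped to the image.
            window = [fg[j][i]
                      for j in range(max(0, y - band), min(h, y + band + 1))
                      for i in range(max(0, x - band), min(w, x + band + 1))]
            if any(v != fg[y][x] for v in window):
                row.append(UNKNOWN)  # near a depth discontinuity
            else:
                row.append(FOREGROUND if fg[y][x] else BACKGROUND)
        trimap.append(row)
    return trimap
```

Thin canes only a few pixels wide end up entirely in the unknown band, which is the situation the matting network is meant to resolve.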

If the smartphone does not have dual cameras that can be used to generate depth maps, the algorithm is as follows:

- Ask the user to stand 1 m from the vine (if necessary, provide a ruler).
- Capture the image.
- Have the user select points in the image and obtain a rough segmentation using the method of [13].
- Create a trimap based on this rough segmentation.
- Use alpha matting to refine the segmentation of the canes.

Fig. 6.

Depth Calculation (Z) Using the Baseline (Distance Between the Two Cameras)

RESULTS

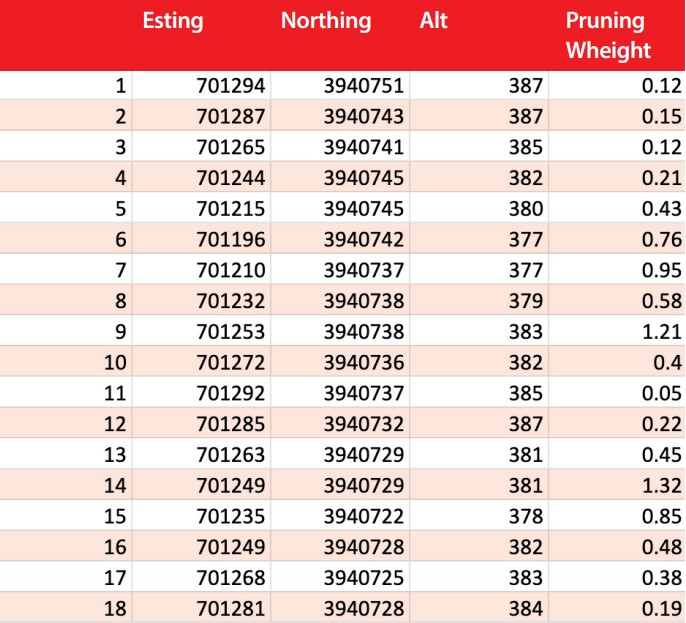

To validate our proposed computer vision implementation, for every image of a vine taken in the field with the smartphone, we also collected the GPS location, the altitude, and the manually obtained pruning weight. To obtain these data, we followed the pruning crew through the vineyard, photographing each vine before it was pruned and then collecting and weighing its canes. A sample subset of the data collected is shown in Table 1.

Data Collected

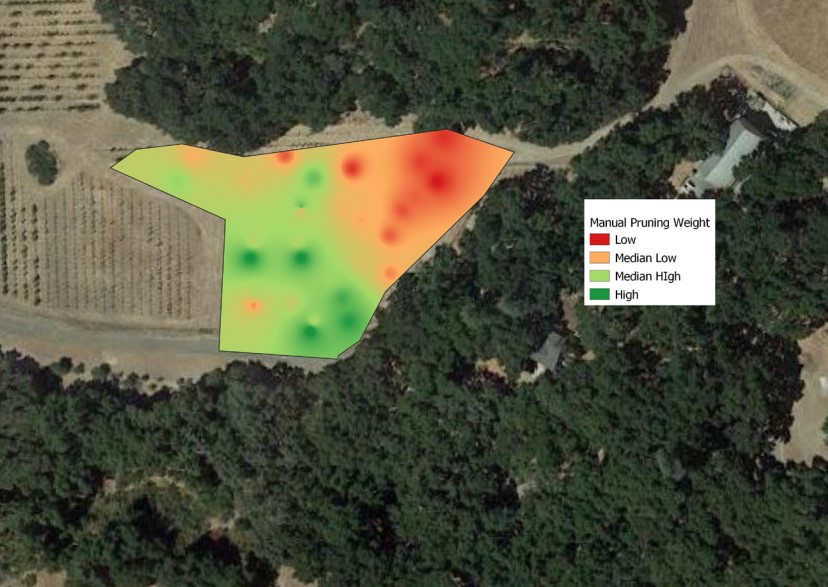

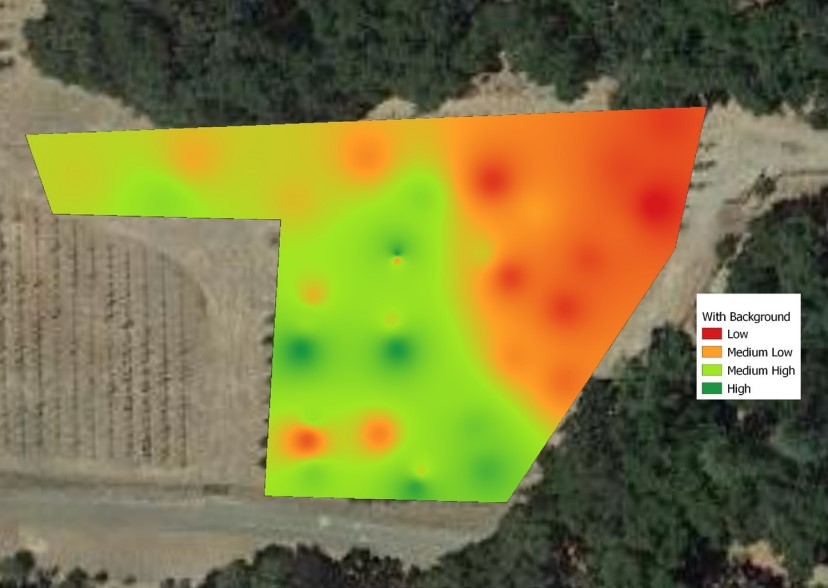

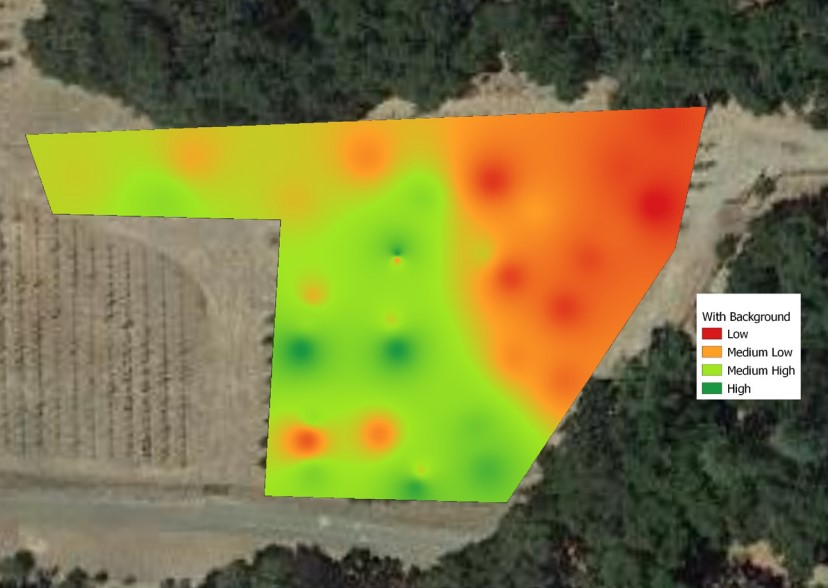

We used the QGIS software [16] to create pruning weight interpolation maps for the entire vineyard. These maps are key in precision agriculture because they visualize the collected data, simplifying the identification of different yield areas (in our case, pruning weight). The production manager or viticulturist can later use these maps to apply different management instructions to distinct areas and to easily compare the effects of different production methods across years.
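The paper does not say which interpolation method was used in QGIS. Inverse distance weighting (IDW), one of the methods offered by QGIS's Interpolation plugin, is a common choice for point data of this kind. The sketch below shows the computation, assuming sample positions have already been projected to planar coordinates in meters rather than raw GPS latitude/longitude. The power parameter `power=2` and the function names are assumptions for illustration.

```python
def idw(samples, x, y, power=2.0):
    """Inverse-distance-weighted estimate at (x, y).

    samples: iterable of (x, y, value) in projected (planar) coordinates.
    """
    samples = list(samples)
    if not samples:
        raise ValueError("need at least one sample")
    num = den = 0.0
    for sx, sy, value in samples:
        d2 = (sx - x) ** 2 + (sy - y) ** 2
        if d2 == 0:
            return value  # exactly on a sample point: return its measured value
        w = 1.0 / d2 ** (power / 2)  # weight = 1 / distance**power
        num += w * value
        den += w
    return num / den


def idw_grid(samples, xs, ys, power=2.0):
    """Evaluate IDW on a regular grid (rows follow ys, columns follow xs)."""
    samples = list(samples)
    return [[idw(samples, x, y, power) for x in xs] for y in ys]
```

For two hypothetical vines 10 m apart with pruning weights of 1.0 kg and 3.0 kg, the estimate midway between them is 2.0 kg, and 2.5 m from the first vine it is 1.2 kg. A higher `power` makes the map more local and patchier.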

In this project we first created the interpolation map using the manual pruning weights (Figure 7). In this map we can see two very different areas in the vineyard: the top right (lower production) and the rest (higher production). This difference may be due to the inclination of the terrain, which is very clear in the altitude data. We want to make clear that in this project we do not determine or evaluate the reason for the difference between areas; we leave this for a future project in which we will also take soil samples and other data. The main goal of this project is to create the map automatically and faster than the traditional (manual) way. Therefore, validation for our project consists of producing a map equivalent to the one shown in Figure 7.

Fig. 7.

Pruning Weight Interpolation Map using Manual Pruning Weight

We also visually inspected all the images, assigned a score from 1 to 10 to each vine, and created a second interpolation map (Figure 8). A value of 1 indicates a low pruning weight and 10 a high pruning weight. The purpose of this visual inspection was, first, to become familiar with the input images (in machine learning it is very important to really know and understand the input data) and, second, to find out whether a human can provide a basic estimate of the weight from the images alone. If a human can find the pattern, then a machine learning algorithm should be able to do the same given the same input, the images.

Fig. 8.

Pruning Weight Interpolation Map using Visual Inspection of the Images

There are small differences between Figure 7 (manual pruning weight) and Figure 8 (visual inspection of the images). The differences arise because some of the vines' canes were left unpruned to be used as guides for cordons the next season; for the most part, however, the maps are equivalent for determining the different yield zones in the vineyard.

Results Using Images with Artificial Background and Watershed Segmentation

Using the segmentation algorithm described in Section 2.1, we obtain the pruning weight map in Figure 9. As can be seen from the two interpolation maps, Figures 7 and 9, the areas of different pruning weight are essentially equivalent.

Some differences remain, mainly due to the different pruning techniques applied to some vines, where instead of pruning all the canes the crew left two large ones as cordons or guides for the next year. This method was not applied consistently to all vines, hence the variation. In the future we will add the pruning method used on each vine to the collected data. All of the vineyards we collected data from are commercial operations and have some inconsistencies like the one mentioned: sometimes the crews leave cordons, and sometimes they remove entire branches instead of just the new-growth canes (shoots). This does not mean the segmentation is wrong; the differences arise because the automatic segmentation measures only the weight of the new growth, while the pruning crew sometimes removes more than the new growth.

Fig. 9.

Pruning Weight Interpolation Map using Watershed Segmentation on Images With Artificial Background

Results Using Images without Artificial Background and Alpha Matte Segmentation

Using the segmentation algorithm described in Section 2.2, we obtain the pruning weight map in Figure 10.

As can be seen from the images, this interpolation map is essentially equivalent to the one obtained by manually weighing the prunings of each vine, which indicates that the proposed automatic segmentation works, with the great advantage of not requiring an artificial background or intensive manual labor to create the maps. The small inconsistencies in this map are the same as for the images with an artificial background and have the same cause: some of the vines were left with two new cordons, whose weight is not included in the manual pruning weight but is included in the automatic image-based estimates.

Fig. 10.

Pruning Weight Interpolation Map using Alpha Matte Segmentation on Images Without Artificial Background

Figure 11 shows that the depth sensor (an Intel RealSense camera [7]) has trouble creating an accurate depth map of the vine and misses most of the canes (shoots). We think the main problem is that the RealSense sensor was designed to work indoors under constant lighting and does not work well outdoors, where lighting conditions vary. We need to test these Intel sensors further, since the latest version is more accurate, uses lidar technology to create the depth map, and has a range of around 10 meters, although all of them are designed mainly for indoor use. At present, with the D435 version, the results cannot be used for our project, and the system is inconvenient to take to the field; this supports our original idea that a regular phone camera is the best option.

Fig. 11.

Depth Map Obtained Using the RealSense Camera

CONCLUSION AND FUTURE WORK

According to the California Department of Food and Agriculture, total investment in AI technology in agriculture was around $5 billion in 2017, and this number is expected to keep growing in the coming years. The NSF recently issued a call for grant proposals to fund AI Research Institutes in the USA, specifically mentioning a track on AI-Driven Innovation in Agriculture and the Food System [14]. Why is it so important to bring more AI into agriculture? Recent advances in agricultural management have dramatically improved agriculture around the world, partly because of the ability to adapt to local factors that influence crop yield, such as climate, growing region, and soil type. The main reason farmers do not already adapt to local conditions is that they lack the knowledge or tools to practice precision agriculture. AI techniques will help by automating these practices, making them cheaper and available to small farmers.

In our particular viticulture project, a wide range of plant densities and training/trellis systems are used by growers to optimize harvest practices. A primary consideration when selecting the proper trellis system is the vine vigor. In this project we successfully implemented the segmentation of grape vines to estimate this vigor automatically providing the necessary information to the grower in a timely manner.

The software (written in Python) is still under development and is therefore not yet available as open source; readers interested in obtaining a copy for research purposes may contact the authors.

AUTHORS' CONTRIBUTION

All authors collaborated equally.

CONFLICTS OF INTEREST

All authors declare that they have no conflicts of interest.

References

[1] M. Diago, M. Krasnow, M. Bubola, B. Millan, and J. Tardaguila. Assessment of vineyard canopy porosity using machine vision. American Journal of Enology and Viticulture, 67:229-238, 2016. doi: https://doi.org/10.5344/ajev.2015.15037

[2] M. P. Diago, A. Sanz-Garcia, B. Millan, J. Blasco, and J. Tardaguila. Assessment of flower number per inflorescence in grapevine by image analysis under field conditions. J. Sci. Food Agric., 94, 2014. doi: https://doi.org/10.1002/jsfa.6512

[3] K. Divilov, T. Wiesner-Hanks, P. Barba, L. Cadle-Davidson, and B. I. Reisch. Computer vision for high-throughput quantitative phenotyping: A case study of grapevine downy mildew sporulation and leaf trichomes. Phytopathology, 107(12):1549-1555, 2017. doi: https://doi.org/10.1094/PHYTO-04-17-0137-R

[4] F. P. Georgiana and S. V. G. Gui. An automatically crown-truck segmentation in tree drawing test. 11th International Symposium on Electronics and Telecommunications (ISETC), Timisoara, Romania, 2014. doi: https://doi.org/10.1109/ISETC.2014.7010804

[5] R. Girshick, I. Radosavovic, G. Gkioxari, P. Dollár, and K. He. Detectron. https://github.com/facebookresearch/detectron, 2018.

[6] K. He, G. Gkioxari, P. Dollár, and R. B. Girshick. Mask R-CNN. CoRR, abs/1703.06870, 2017.

[7] Intel Inc. Intel RealSense. https://www.intelrealsense.com/, April 2020.

[8] W. Ji, X. Meng, Z. Qian, B. Xu, and D. Zhao. Branch localization method based on the skeleton feature extraction and stereo matching for apple harvesting robot. International Journal of Advanced Robotic Systems, 2018. doi: https://doi.org/10.1177/1729881417705276

[9] J. Kirchener. Automatic image-based determination of pruning mass as determinant for yield potential in grape vine management. J. Sci. Food Agric., 94, 2017. doi: https://doi.org/10.1111/ajgw.12243

[10] A. Kornilov and I. Safonov. An overview of watershed algorithm implementations in open source libraries. Journal of Imaging, 4(10):123, 2018. doi: https://doi.org/10.3390/jimaging4100123

[11] J. Long, E. Shelhamer, and T. Darrell. Fully convolutional networks for semantic segmentation. CoRR, abs/1411.4038, 2014.

[12] Y. Majeed, J. Zhang, X. Zhang, L. Fu, M. Karkee, Q. Zhang, and M. D. Whiting. Apple tree trunk and branch segmentation for automatic trellis training using convolutional neural network based semantic segmentation. IFAC-PapersOnLine, 51, 2018. doi: https://doi.org/10.1016/j.ifacol.2018.08.064

[13] K. Maninis, S. Caelles, J. Pont-Tuset, and L. V. Gool. Deep extreme cut: From extreme points to object segmentation. CoRR, abs/1711.09081, 2017.

[14] NSF. National Artificial Intelligence (AI) Research Institutes: AI-Driven Innovation in Agriculture and the Food System, 2019.

[15] M. Orr, O. Grillo, G. Venora, and B. G. Seed morphocolorimetric analysis by computer vision: a helpful tool to identify grapevine (Vitis vinifera L.) cultivars. Australian Journal of Grape and Wine Research, pp. 508-519, 2015. doi: https://doi.org/10.1111/ajgw.12153

[16] QGIS Development Team. QGIS Geographic Information System. Open Source Geospatial Foundation, 2009.

[17] L. Ravaz. Sur la brunissure de la vigne. Les Comptes Rendus de l'Académie des Sciences, 136:1276-1278, 1903.

[18] C.-H. Teng, Y.-S. Chen, and W.-H. Hsu. Tree segmentation from an image. Proceedings of the IAPR Conference on Machine Vision Applications, 2005.

[19] N. Xu, B. L. Price, S. Cohen, and T. S. Huang. Deep image matting. CoRR, abs/1703.03872, 2017.